Ritchey-Common Test & Similar Methods Using AI Image Space Phase Retrieval

This document describes a general method for measuring optical surfaces in reflection and/or transmission when the incoming beam is not a simple plane wave. Such tests include the Hindle test for convex surfaces and the Ritchey-Common (RC) test for flat, concave, or convex surfaces. We explain the concept using the RC test as a general example. However, it should be obvious to anyone skilled in optical metrology and related measurements that the method is not limited to the specific configurations mentioned. It also applies to other setups in which the measuring beam or beams (incoming and/or outgoing) are not plane waves (collimated beams). More generally, the method addresses configurations where the relationship between the surface and the wavefront of the beam or beams used to measure it is not trivial, that is, not a simple scale factor. The wavefront encodes the surface in a complex and often non-linear way. The remainder of the document gives an example implementation of this approach; it is neither limiting nor exhaustive.

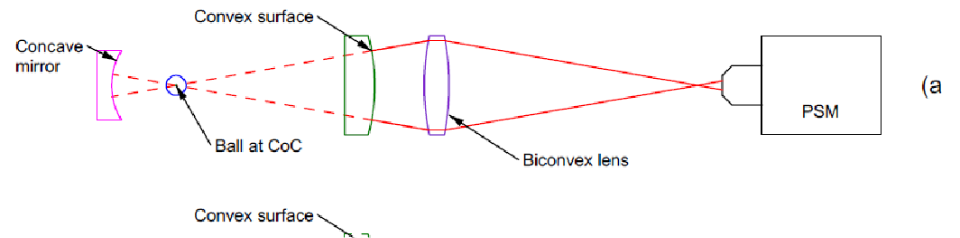

The Ritchey-Common (RC) test is a widely used optical method for testing large plane mirrors, initially proposed by A. A. Common in 1888 and detailed by G. H. Ritchey in 1904. The RC test extends the Foucault test for spherical surfaces by placing a flat mirror in the path of a diverging beam reflected off a spherical mirror. If the reference sphere is perfect, the setup is auto-stigmatic, perfectly imaging a point source back on itself. Residual power in the flat also introduces some astigmatism. Today the RC test is often done using interferometry, positioning the point source at the reference sphere’s center of curvature. This approach generates interferograms that require specific data reduction techniques because the light does not strike the test piece at normal incidence. See Figure #1 for a typical RC setup.

Figure #1: Ritchey-Common (RC) test configuration

The source setup creates an outgoing spherical diverging beam to be reflected by the surface under test and the reference spherical mirror, resulting in an incoming (return) converging beam. The source is positioned at the center of curvature (CC) of the spherical mirror, which acts as a reference. In the simple case where the surface under test is flat, the return incoming beam is imaged at the spherical mirror’s CC, or near it.

In the context of interferometry, a transmission sphere can be used to create the outgoing beam, which eventually interferes with the incoming beam reflected back from the spherical mirror and the surface under test. This setup constitutes a double-pass test. The angle theta, known as Ritchey’s angle, is critical in this setup. The combination of the diverging nature of the outgoing beam and the angle theta (typically not zero in the RC test) creates a specific non-linear mapping between the profile of the surface under test and the wavefront of the incoming beam. This mapping is a crucial aspect of the RC test and similar tests. Data reduction is necessary to relate the measured wavefront (obtained from an interferometer, a wavefront sensor, or using image space phase retrieval or phase diversity methods) to the optical surface deformation (profile) under test. Several mappings have been developed to address this issue, such as those proposed by T. A. Fritz (see “Interferometric Evaluation for a Ritchey-Common Test Configuration,” M.S. Thesis, U. Arizona, Tucson, 1980). In this document, we propose a method to directly retrieve the surface profile from the image formed by the incoming beam using AI. This is related to Innovations Foresight’s AI4Wave technology, which has been used for phase retrieval (U.S. Patent 11,300,445 – System and Method for Wavefront Sensing with Engineered Images). Here, the RC test is used as an example of a possible implementation of such an approach, but it is not a limitation. Also, this approach can be implemented in reflection, transmission, or a combination of both. For instance, it can be used to measure both sides of a transparent surface, including its thickness.

Using RC test mapping formulas (exact or approximate) or ray tracing techniques, one can compute the return incoming beam and its wavefront for a given profile of the surface under test. By applying scalar diffraction theory, one can then compute the image formed by the incoming beam at or near the spherical mirror CC. In the context of phase retrieval, we typically use a defocused image, either intra- or extra-focal, though other phase modulations can also be used. The general idea disclosed here is to use such an image to retrieve the surface profile directly, without mapping it from the wavefront and without even needing to know the wavefront. However, we can also retrieve the wavefront if needed. One interesting aspect of image space wavefront sensing (known as phase retrieval when using a single image, and as phase diversity when using several images at different defocus positions) is that, for weak return beams, there is no intensity mismatch between the incoming and outgoing (reference) beams to deal with, as there would be with interferometry. This mismatch can be quite challenging if the surface is uncoated (clear), since the return beam could have very low intensity. In image space wavefront sensing there is no interference, and we can improve the signal-to-noise ratio (SNR) by increasing the exposure time of the camera capturing the image of the incoming beam. Also, since there is no interference, this approach is by nature very robust against vibration and other artifacts.

For simplicity, let’s assume the surface under test is circular, and its deviation from a perfect flat surface is described by a set of Zernike polynomials and their coefficients. This is not a limitation of the method; other shapes can also be used. Additionally, other methods for describing surface deviation from flatness can be employed besides Zernike polynomials. For instance, one could sample the surface at specific known locations instead of using the Zernike parametric description. We could also combine the Zernike polynomial parametric surface departure description with other methods. In the context of a circular surface and using Zernike polynomials, we can compute the incoming wavefront error due to the surface under test using ray tracing, for instance. This calculation uses the Zernike polynomials related to the surface under test and the knowledge of the test setup (theta angle, spherical mirror, including its own surface error, as well as the entire test system geometry). In short, this means that the wavefront error WF(u,v) of the incoming beam at some location along the return path after the second reflection from the surface under test is a function of the surface S(x,y) departure (error) from a flat surface. Here, x and y refer to the 2D position on the surface under test, and u and v to the 2D position on the wavefront error measured on a plane orthogonal to the return beam path at some location z along it. We have:

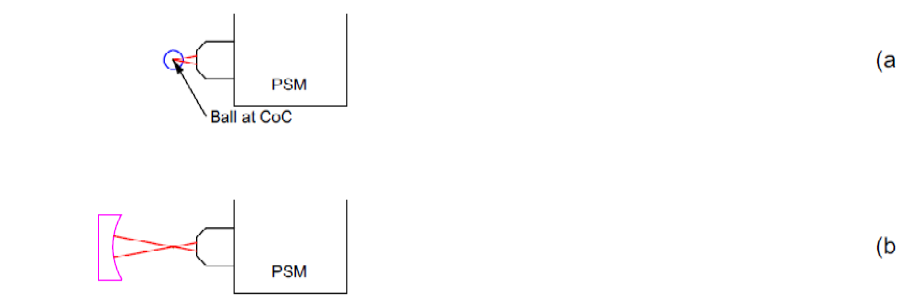

WF(u,v,z) = fmap(S(x,y))

fmap is the function relating the surface errors to the wavefront error (the departure of the actual wavefront from a perfect spherical wavefront). As mentioned before, image space phase retrieval typically uses a defocused image, taken either before or after the mirror CC (this is not a limitation of the method; other phase modulations could be used). By choosing the z value to be close to the spherical mirror CC with some known defocus modulation (some distance away from CC), we can compute the wavefront of the incoming (return) beam using exact ray tracing calculations (or some mapping functions) and then the diffraction pattern using the Fourier transform of the complex pupil function formed from the WF. The extent of the WF is limited by the diameter d of the surface under test or by the diameter D of the spherical mirror; one of them acts as the stop of the system. In the most common situation, the surface acts as the stop, which means that the WF is defined (non-zero) inside an elliptical-like shape (resulting from the mapping of the circular surface for a given theta angle, spherical mirror radius, and diameter D). Figure #2 below shows both cases, with the system stop defined by the circular surface under test or by the spherical mirror. When the circular surface under test (circular is just an example, not a limitation) is the system’s stop, the resulting defocused image is elliptical in nature. Its exact shape is more complex than an ellipse because the surface stops a divergent spherical beam. When a circular stop is placed in front of the spherical mirror and becomes the system’s stop, the defocused image is, as expected, round (circular). The shape of the defocused image does not matter for the description of this method.
When creating the samples for the training, validation, and testing databases for the neural network (or any machine learning and function approximation tools) used by AI to learn the inverse function relating the defocused image to the Zernike polynomial coefficients (or other ways to describe the surface under test error/shape), we will automatically account for any shape of the defocused image and therefore the surface under test. It should be understood that using mapping functions or ray tracing, we can express the return (incoming) beam phase error relative to a perfect spherical wavefront (if there is no aberration and a perfectly flat surface).
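As a rough illustration of the fmap relation, the sketch below builds a surface departure S(x,y) from a few circular Zernike terms and converts it to a wavefront error with a first-order approximation, WF ≈ 4·cos(theta)·S. This is not the exact ray tracing or mapping described in this document: it assumes a collimated beam, two reflections off the surface under test, theta taken as the angle of incidence measured from the surface normal, and it ignores the elliptical foreshortening of the pupil. The function names, the grid size, and the choice of terms (Noll RMS normalization) are illustrative assumptions.

```python
import numpy as np

def zernike_surface(coeffs, n_pix=128):
    """Surface departure S(x, y), in waves, on a unit-radius circular aperture.

    coeffs maps a term name to its RMS coefficient in waves. Only a few
    low-order terms are provided here (hypothetical, illustrative selection).
    Returns the surface map and the boolean aperture mask.
    """
    y, x = np.mgrid[-1:1:1j * n_pix, -1:1:1j * n_pix]
    r2 = x**2 + y**2
    inside = r2 <= 1.0
    basis = {
        "defocus": np.sqrt(3) * (2 * r2 - 1),
        "astig_0": np.sqrt(6) * (x**2 - y**2),
        "astig_45": np.sqrt(6) * 2 * x * y,
        "coma_x": np.sqrt(8) * (3 * r2 - 2) * x,
        "coma_y": np.sqrt(8) * (3 * r2 - 2) * y,
        "spherical": np.sqrt(5) * (6 * r2**2 - 6 * r2 + 1),
    }
    surface = np.zeros_like(x)
    for name, value in coeffs.items():
        surface += value * basis[name]
    return np.where(inside, surface, 0.0), inside

def first_order_wavefront(surface_waves, theta_deg):
    """First-order stand-in for fmap (assumption): each reflection at incidence
    angle theta adds 2*S*cos(theta) of path, and the RC beam reflects twice."""
    return 4.0 * np.cos(np.radians(theta_deg)) * surface_waves

# Example: 0.1 wave RMS of surface power, theta = 60 degrees (cos = 0.5).
s, inside = zernike_surface({"defocus": 0.1})
w = first_order_wavefront(s, 60.0)
surface_rms = np.sqrt(np.mean(s[inside] ** 2))
```

A real implementation would replace `first_order_wavefront` with the exact ray trace through the diverging beam, including the reference sphere's own errors.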

We can also express the magnitude of the return beam, basically the region where it is non-zero (here we assume that we have uniform illumination of the surface under test, yet this is not a limitation; we could handle any beam shape, like a Gaussian beam as well). This will define the pupil shape (circular, elliptical, or any other shape, including obstructions, if any) used to compute the diffracted defocused image through the 2D Fourier transform, as discussed above. It should be understood that using the Fourier transform is a convenient way to compute the diffraction pattern (typically a defocused image), but other techniques can be used, especially in the context of large angles where we cannot assume sin(x) ≈ x (small angle approximation) and similar approximations. We are also not limited to scalar diffraction theory; although it is the most common approach in most applications, it is not a limitation of this method. All we need is to compute sufficiently accurate defocused images (including noise and artifacts, if any) to be able to compute the inverse function through machine learning (function approximation) relating the image to the surface under test to some level of accuracy.
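The Fourier-transform step can be sketched as follows, under the scalar, small-angle (Fraunhofer) assumptions mentioned above. The pupil amplitude and the wavefront phase are combined into a complex pupil function whose 2D FFT gives the image intensity. The function name `defocused_image`, the grid size, and the padding factor are hypothetical choices for illustration; the 3 waves RMS defocus is used here only as an example bias.

```python
import numpy as np

def defocused_image(amplitude, phase_waves, pad_factor=2):
    """Normalized image intensity from a pupil amplitude and a phase in waves.

    The pupil is zero-padded to sample the image more finely. Placing it in a
    corner of the padded array only adds a linear phase to the spectrum, which
    does not change the intensity.
    """
    n = amplitude.shape[0]
    field = amplitude * np.exp(2j * np.pi * phase_waves)
    padded = np.zeros((pad_factor * n, pad_factor * n), dtype=complex)
    padded[:n, :n] = field
    spectrum = np.fft.fftshift(np.fft.fft2(padded))
    image = np.abs(spectrum) ** 2
    return image / image.sum()

# Circular, uniformly illuminated pupil. The grid must be fine enough that the
# phase changes by less than half a wave between neighboring pixels.
n = 128
y, x = np.mgrid[-1:1:1j * n, -1:1:1j * n]
r2 = x**2 + y**2
pupil = (r2 <= 1.0).astype(float)

bias = 3.0 * np.sqrt(3) * (2 * r2 - 1) * pupil  # 3 waves RMS of defocus
img = defocused_image(pupil, bias)
focused = defocused_image(pupil, np.zeros_like(pupil))
```

An elliptical or obstructed pupil, or a Gaussian illumination profile, only changes the `amplitude` array; the rest of the computation is unchanged.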

Figure #2: RC test defocused shape versus system’s stop location (theta = 90 degrees)

In Figure #2, the sensor is placed behind the source, on axis, for simplicity. In a practical implementation, one can use a beam splitter in front of the source to separate the outgoing and incoming beams, or slightly tilt the surface, the mirror, or the source, or use a combination of these methods to offset the return image from the source. This can be done with minimal aberrations, which, if present, can be subtracted after proper calibration. Figure #3 below shows examples of defocused images for some basic deformations of a flat circular surface described by Zernike polynomials. Here theta, Ritchey’s angle, has been set to 30 degrees and the outgoing beam is f/2. The circular surface under test is the system’s stop.

Figure #3: RC test surface error 2D heat plot, related defocused images, and wavefront 2D heat plots. Theta = 30°, beam at f/2. Surface departure expressed using circular Zernike polynomials, all values in wave RMS.

As discussed above, using ray tracing or other mapping functions, we compute the return (incoming) beam wavefront and then the related defocused images (typically using the Fourier transform) for a given surface departure (from flat). This departure can be conveniently expressed with the Zernike polynomials (but this is not a limitation). By selecting a defocus bias (in this example, 3 waves RMS), we create the necessary phase modulation to ensure the uniqueness of the solution, meaning there is a unique image related to a unique wavefront and therefore to a unique surface departure (deformation). This ensures convergence to an accurate solution during the training of the neural network or equivalent function approximation tool.

We typically build a training, validation, and test database with only simulated images; we do not need actual images for this method (although we could use some or mix synthetic images with actual ones if we choose). Pure simulations using the above mathematics allow for the creation of many samples (many millions) in order to sample the inverse function well for a set of Zernike polynomials and related coefficients used to describe the surface departure (or other methods). This is essentially identical to the approach used in Innovations Foresight’s AI4Wave technology. Here, we reconstruct the surface instead of the wavefront from the image (we could do both as well). This is a direct approach that does not need to solve any non-linear problems or equivalents at run time. Once the training of the neural network has been done and validated, the calculation of the surface departure (the Zernike coefficients or similar) from a given defocused image is extremely fast, taking only a few milliseconds on most simple PCs. Another important point is that, since there is no run-time optimization, we do not have to deal with local minima or with finding good enough initial guesses. All the performance, accuracy, and bias were already validated during the training of the neural network, or any relevant function approximation algorithm. This validation of the performance can be done with as many samples as we want, since we can compute them.
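A minimal sketch of how such a purely simulated database could be generated is given below. It is not the AI4Wave implementation. It draws random surface Zernike coefficients, applies a forward model, and stores (image, coefficients) pairs. The forward model here is a simplified stand-in: a first-order mapping WF ≈ 4·cos(theta)·S with theta assumed to be the angle of incidence from the surface normal, a circular pupil, and an FFT-based image. All names, the five-term basis, the grid size, and the coefficient range are hypothetical.

```python
import numpy as np

N_PIX = 128
_Y, _X = np.mgrid[-1:1:1j * N_PIX, -1:1:1j * N_PIX]
_R2 = _X**2 + _Y**2
PUPIL = (_R2 <= 1.0).astype(float)

# Defocus, two astigmatisms, two comas (Noll RMS normalization).
BASIS = np.stack([
    np.sqrt(3) * (2 * _R2 - 1),
    np.sqrt(6) * (_X**2 - _Y**2),
    np.sqrt(6) * 2 * _X * _Y,
    np.sqrt(8) * (3 * _R2 - 2) * _X,
    np.sqrt(8) * (3 * _R2 - 2) * _Y,
])

def forward_model(coeffs, theta_deg=30.0, bias_rms=3.0):
    """Surface coefficients (waves RMS) -> normalized defocused image."""
    surface = np.tensordot(coeffs, BASIS, axes=1)
    # Simplified first-order mapping (assumption), plus the known defocus bias.
    wavefront = 4.0 * np.cos(np.radians(theta_deg)) * surface
    wavefront = wavefront + bias_rms * BASIS[0]
    field = PUPIL * np.exp(2j * np.pi * wavefront)
    padded = np.zeros((2 * N_PIX, 2 * N_PIX), dtype=complex)
    padded[:N_PIX, :N_PIX] = field
    image = np.abs(np.fft.fftshift(np.fft.fft2(padded))) ** 2
    return image / image.sum()

def make_dataset(n_samples, max_rms=0.05, seed=0):
    """Random coefficients in [-max_rms, max_rms] and their simulated images."""
    rng = np.random.default_rng(seed)
    labels = rng.uniform(-max_rms, max_rms, size=(n_samples, len(BASIS)))
    images = np.stack([forward_model(c) for c in labels])
    return images.astype(np.float32), labels.astype(np.float32)

images, labels = make_dataset(3)
```

The resulting arrays can be fed to any regression framework to approximate the inverse function, with the images as inputs and the coefficients as targets. Noise and detector artifacts would be added to the images at this stage if they are to be learned.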

Figure #4: Synopsis of training the inverse function relating defocused images to surface departure from flat, using Zernike polynomials.

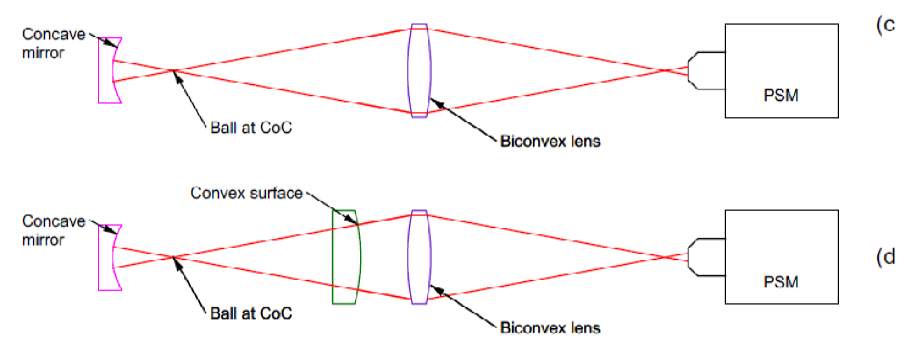

So far, we have considered the RC test to measure the reflection of a flat surface. However, the same concept can be used to measure both the surface and the thickness, as well as the wedge, of both sides of an opaque (yet reflective) surface or, more interestingly, a transparent flat window of any shape. For simplicity, we are considering a circular window, but this is not a limitation. The simplest approach would be to use two RC tests based on the specular reflection of the window, as shown in Figure #5 below (with theta set at 45 degrees for convenience). The first spherical outgoing beam is depicted in red, while the second is in green.

Figure #5: Double-Sided Reflecting RC Test

One drawback of this approach is the need for two reference spherical mirrors and sources. In the context of a transparent flat window under test, there is the option to use a reflective and transmissive test. The former is a classic RC test, while the latter is a modified version of the RC test. Figure #6 below shows the concept (with theta set at 45 degrees for convenience). The reflected rays are depicted in red, while the transmitted rays are in green. However, for both sources (RC test on reflection, red rays, and on transmission, green rays), we observe both reflections and transmissions on each sensor. There is only a single reference spherical mirror.

Figure #6: Flat window measurement using RC test in reflection (red rays) and transmission (green rays). Each sensor captures two primary images: one from the reflection source and one from the transmission source. Flat window’s internal reflections not considered here.

Each sensor (one for the reflection RC test side and one for the transmission RC test side) exhibits two defocused images with the same shape (system’s stop at the level of the flat window). These are the primary reflection and transmission images. For simplicity, we consider the thickness of the flat window to be infinitely small, so there are no internal reflections. Figure #7 shows the internal reflections for an on-axis ray from the reflection RC test source (on the left). These reflections also occur for the transmission RC test. They can be addressed by using a spatial filter near the mirror CCs if there is any overlap between the defocused images and their internal reflections. Such a filter is essentially a circular hole of the proper size to allow the primary incoming beams (reflection and transmission) to pass through while blocking the reflections. The latter are separated by some distance (translation) related to theta, the window thickness, and its index of refraction. In this document, we won’t consider such reflections since they can be filtered out or ignored by cropping the proper subframe on each sensor. However, we mention them here because they can also be conveniently used to gather more information about the flat window under test if necessary. For instance, the translation distance of such internal reflection images tells us about the window thickness, among other things. It should be understood by anyone skilled in the art of engineering that we could process the images from these reflections in the same manner using AI, or equivalent tools, to retrieve information about the flat window’s surfaces, thickness, and wedge angle. This may improve accuracy and SNR, for instance. Those reflections can be combined with the primary image or not, depending on the context.
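To make the dependence on theta, thickness, and index of refraction concrete, the sketch below uses the textbook result for a plane-parallel plate in a collimated beam: the first back-surface reflection is displaced from the front-surface reflection by t·sin(2·theta)/sqrt(n² − sin²(theta)), measured perpendicular to the reflected beams. This assumes no wedge, a chief-ray (collimated) approximation of the diverging RC beam, and theta taken as the angle of incidence from the window normal. The function names are hypothetical.

```python
import math

def internal_reflection_shift(thickness, index, theta_deg):
    """Lateral separation between the front-surface reflection and the first
    back-surface reflection of a plane-parallel plate (same unit as thickness)."""
    theta = math.radians(theta_deg)
    return thickness * math.sin(2 * theta) / math.sqrt(index**2 - math.sin(theta) ** 2)

def thickness_from_shift(shift, index, theta_deg):
    """Inverse relation: window thickness from a measured separation."""
    theta = math.radians(theta_deg)
    return shift * math.sqrt(index**2 - math.sin(theta) ** 2) / math.sin(2 * theta)

# Example: 10 mm window, n = 1.5, theta = 45 degrees.
shift_mm = internal_reflection_shift(10.0, 1.5, 45.0)
recovered_mm = thickness_from_shift(shift_mm, 1.5, 45.0)
```

With these example values the separation is about 7.56 mm, which gives an idea of the spatial filter or subframe size needed to isolate the primary images.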

Figure #7: Some internal reflections from the flat window under test. The spherical mirror (which should be located at the bottom of this figure) has been omitted for simplicity. The incoming rays are therefore not considered here.

In the context of the RC test in reflection and transmission depicted in Figure #6 above, we notice that we have reflection and transmission information even with a single source. The decision to use one or two sources depends on the nature of the information we want to retrieve and the nature of the surface under test. In short, we could use a single source (either the reflection or transmission one) if there is no ambiguity in the wavefront reconstruction when using the defocused image, or images, and therefore no ambiguity in the information we are looking for, such as surface departure from flatness, thickness, or wedge. We could also use a single sensor with a single source or any combination of those (one/two sources, one/two sensors). The final decision is related to the above question of the uniqueness of the solution for the inverse problem, known information on the surfaces, constraints, as well as SNR and test geometry. Therefore, it should be understood that even if we describe a system with two sources and two sensors, this is not a limitation of the method but rather a general description using some implementation (embodiment).

When more than one image is available (from one or more sensors, over time, at several wavelengths, or any combination), data fusion for the artificial neural network (ANN) or a similar function approximation tool can be done in different ways, including using one or multiple ANNs. In one embodiment, a single composite image gathers several defocused images from one or more sensors and one or more sources, over time, or over wavelengths (including all combinations). This composite image is provided to the ANN for calculating the characteristics of the surface under test, as depicted in figure #4. The outputs include, but are not limited to, the departure of the first side of the surface from flatness or from any expected profile, or simply its absolute profile. This can be done, for instance, using the Zernike polynomials as in figure #4, but this is merely an example, not a limitation. Other information could include the departure of the second (other) side from flatness or from any expected profile, or simply its absolute profile. We may also want to extract the thickness of the surface under test and any wedge. Other information may be extracted, such as the surface index of refraction, the material index of refraction, several indices of refraction if the surface is made of various materials, or a gradient of the index of refraction, as well as the optical extinction in the context of a somewhat transparent window. We may also want to know the surface polarization effect in terms of reflection and/or transmission. The existence of surface defects (localized high spatial frequencies, defects, or structures) as well as any internal defects or scattering properties may also be estimated from the composite image. This document describes monochromatic illumination implementations, but this is not a limitation either. We could have several wavelengths, a broad spectrum, or a combination of any sources. We could also have other sources located at different positions with other sensors; the typical configuration of two sources presented here is not a limitation either. In the context of several ANNs, we could feed one from the output of another or provide several separate images to a given ANN instead of a single composite image. Any combination of these is possible depending on the problem and expected performance. It is also possible to use an ANN or equivalent on time series, processing images and/or data from successive iterations. One possible implementation could be similar to the transformers used in language models. All the above implementations, combinations, and permutations are understood to be well known by anyone skilled in the art of machine learning and optimization.
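One simple way to form a composite image, shown here only as a sketch, is to tile the individual defocused images into a single array that is then passed to the ANN. The function name `composite_image`, the grid layout, and the optional per-tile normalization are illustrative assumptions; stacking the images as separate input channels would be an equally valid choice.

```python
import numpy as np

def composite_image(images, n_cols=2, normalize=False):
    """Tile equally sized 2D images into one composite array.

    images: sequence of 2D arrays (for instance reflection and transmission
    images from one or two sensors). Unused tiles are left at zero.
    normalize: if True, each tile is scaled to a peak of 1. This discards the
    relative intensity between images, which may itself carry information.
    """
    tiles = [np.asarray(im, dtype=np.float32) for im in images]
    height, width = tiles[0].shape
    n_rows = -(-len(tiles) // n_cols)  # ceiling division
    canvas = np.zeros((n_rows * height, n_cols * width), dtype=np.float32)
    for k, tile in enumerate(tiles):
        if tile.shape != (height, width):
            raise ValueError("all images must have the same shape")
        if normalize and tile.max() > 0:
            tile = tile / tile.max()
        row, col = divmod(k, n_cols)
        canvas[row * height:(row + 1) * height,
               col * width:(col + 1) * width] = tile
    return canvas

# Example: three 4x4 images arranged on a 2x2 grid of tiles.
demo = composite_image([np.full((4, 4), v) for v in (1.0, 2.0, 3.0)])
```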

In some situations, we may want, with the same system, to measure both the entire surface under test as described in the above example and discussion or to measure a smaller area of the surface on either side. In this context, we describe a novel approach using a movable stop of some shape conveniently designed to measure some defined area shape (like a disk, a rectangle, an ellipse, or any other shape) of the surface under test. The stop could be made of several non-adjacent holes too; it is not limited to a single one, whatever its shape. Such a stop may be moved to measure or scan the surface at several locations, with or without overlaps. The stop could have a variable shape and/or size too. A convenient location for such a stop, but not a limitation, is to place it in front of the reference spherical mirror. We could also have several stops combined with several spherical mirrors.

Figure #8 below shows an implementation, as an example, in the context of the RC test combining reflection and transmission as depicted in figure #6. Here the stop is placed in front of the unique reference spherical mirror. It is understood that the stop can be moved freely in any direction (3D) and its shape changed as well. Figure #8 is just an example, not a limitation, of such an implementation. The number of sources, mirrors, sensors, and stops, their shapes and positions, as well as the wavelengths in use, can all be tailored for a given goal.
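In a simulation, a movable stop can be represented as a pupil mask that selects a sub-aperture of the full beam. The sketch below assumes a circular stop and a square scan pattern over a unit-radius aperture; the function names, the normalized coordinates, and the overlap parameter are hypothetical, and any other stop shape or scan path could be substituted.

```python
import numpy as np

def stop_mask(n_pix, center, radius):
    """Boolean mask of a circular stop, clipped to the unit-radius aperture."""
    y, x = np.mgrid[-1:1:1j * n_pix, -1:1:1j * n_pix]
    in_stop = (x - center[0]) ** 2 + (y - center[1]) ** 2 <= radius**2
    in_aperture = x**2 + y**2 <= 1.0
    return in_stop & in_aperture

def scan_positions(radius, overlap=0.25):
    """Stop centers on a square grid, keeping only stops fully inside the
    aperture. overlap is the fraction of the stop diameter shared by neighbors."""
    step = 2 * radius * (1 - overlap)
    ticks = np.arange(-1 + radius, 1 - radius + 1e-9, step)
    return [(float(cx), float(cy)) for cy in ticks for cx in ticks
            if np.hypot(cx, cy) + radius <= 1.0 + 1e-9]

# Example: stop of one quarter of the aperture radius, no overlap.
positions = scan_positions(0.25, overlap=0.0)
masks = [stop_mask(128, p, 0.25) for p in positions]
central = stop_mask(128, (0.0, 0.0), 0.5)
```

Each mask can replace the pupil amplitude in the image simulation, so that training samples are generated for every stop position and shape of interest.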

One very interesting feature of a movable stop and/or a stop of changing shape is the ability to combine full-surface measurements, or several images taken with different stop configurations and locations, or both, with local data from images taken with a stop in place (covering a smaller area of the surface under test). This allows the separation of any defocus error seen by the image (or interferogram, or any other means) due to the RC test setup from any power (quadratic departure) of the surface under test. In a normal RC test, we need to take at least two data sets (images, interferograms, or others) in different configurations, for instance by rotating the surface under test, changing the angle theta, or both, to separate the defocus observed in the image into the contribution of the surface under test and the contribution of the setup defocus error.

The moving stop provides a very convenient way to solve this issue without moving anything else but the stop or just changing the stop shape, or both. This offers a valuable advantage in terms of accuracy and speed. A small stop also allows retrieving high spatial frequency properties of the surface under test, such as roughness. It should be remembered that the defocused image (or phase-modulated engineered image) carries a lot of information about the wavefront phase, and therefore the surface departure in our case, besides piston. This includes roughness as well. It is understood that the images from the moving stop can be processed in the same way as the full surface images. Combining full images and/or stitching the images from the moving stop are options as well. We could equip the stop with some optics or CGH in some configurations. We could also use a mask instead of a simple stop near the reference spherical mirror (or elsewhere in the system, or several masks) for performing sparse aperture imaging and interferometry (like a Hartmann’s mask, for example) and/or as a coded aperture. Such a mask, or masks, could be moved and/or changed over time.

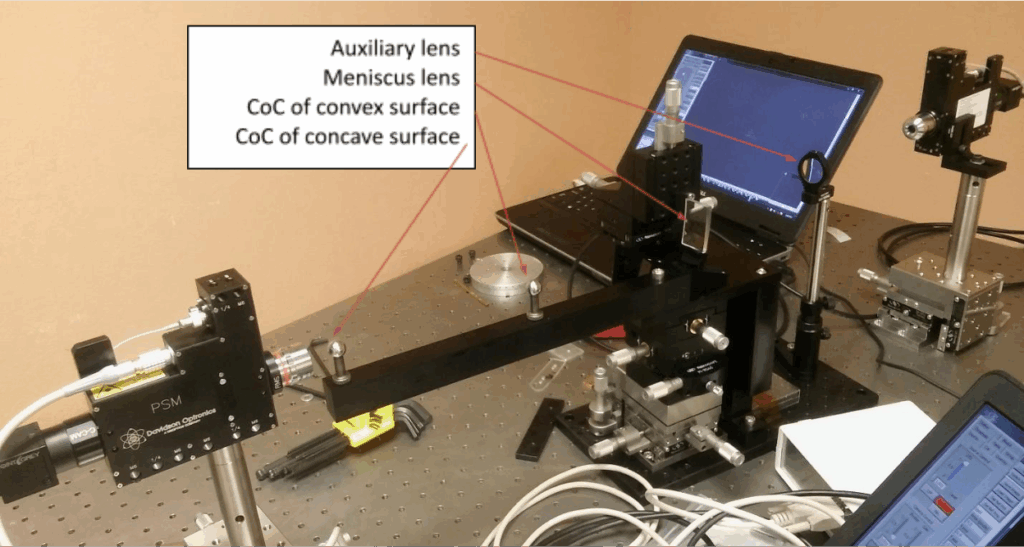

Finally, in this document, we have omitted some auxiliary optics that can be used for magnification of the image for a given camera and sensor pixel size, or to perform wavelength and/or spatial filtering. These include the possibility to correct for coma and astigmatism in the context of a diverging spherical wave passing through a transparent flat surface (a window). We could use such optics to cancel these known aberrations for the nominal, expected thickness, wedge and index of refraction of the window so that the image will only carry departures from those values and the surface itself. Total or partial cancelation of the wavefront errors due to the system optical aberrations and/or the transmission through the flat window can be done in many ways, including by using computer-generated holograms (CGH). All possible auxiliary optics are well known to anyone skilled in the art of optics.

Figure #8: RC test with a moving stop in front of the spherical mirror. The defocused images exhibit a circular shape since the stop acts as the system stop now.